Autonomous Robotic Foosball

C++ / Nvidia Jetson / TensorRT / Arduino

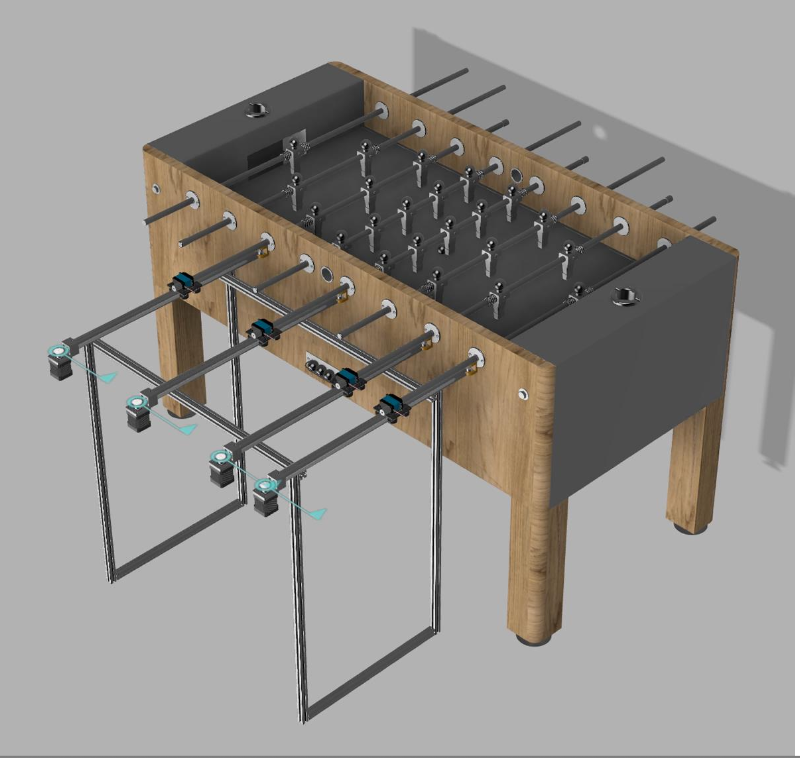

Hardware Implementation

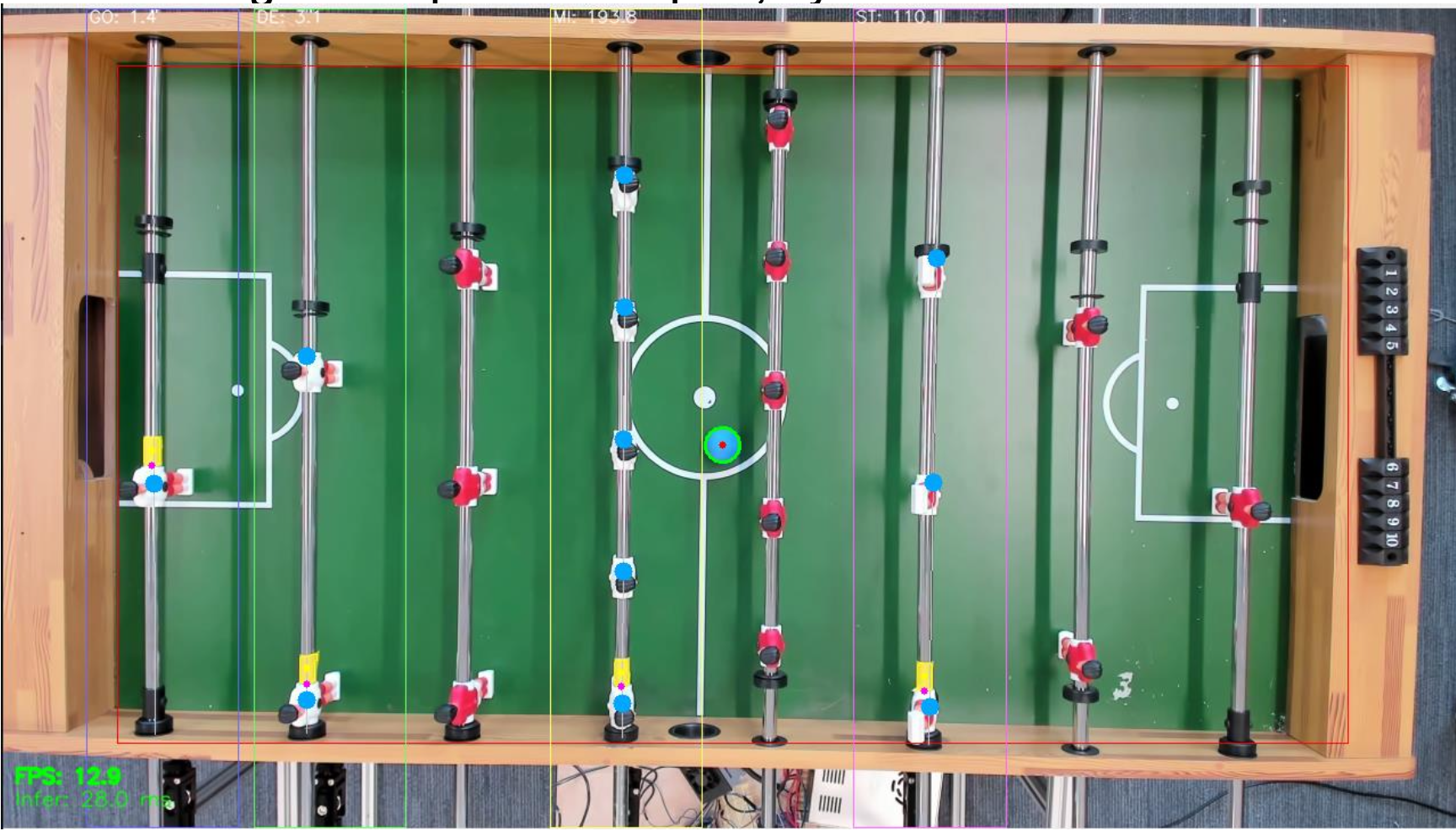

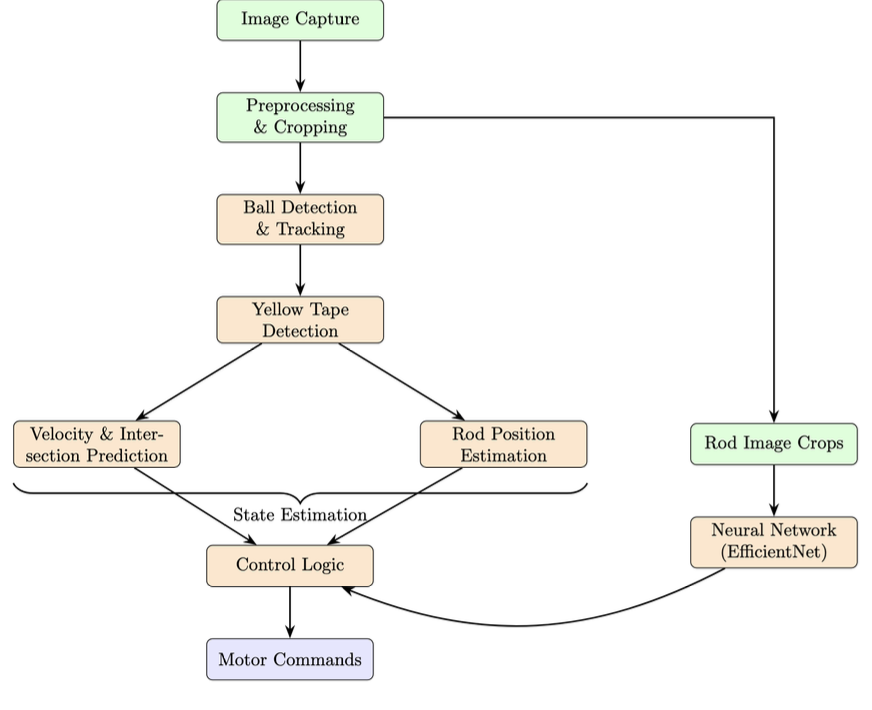

The hardware architecture separates high-level perception from low-level motor actuation. An Nvidia Jetson AGX Orin handles vision processing on a Logitech BRIO camera stream cropped to the playfield. We convert frames to the HSV color space and apply color range thresholding to isolate the blue ball and the yellow end-of-rod markers. A centroid calculation on the largest contour provides the ball's position. We calculate velocity by averaging the frame-to-frame position delta over a sliding window of recent frames, then project the linear trajectory to find where it intersects each rod's x-plane. The Jetson transmits the resulting move and rotate commands to the Arduino, which actuates the stepper motors for lateral and rotational (kick) movement.
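The velocity averaging and trajectory projection described above can be sketched roughly as follows. This is a minimal illustration rather than the project's actual code: the `Point2f`, `Velocity`, `Intercept`, and `BallTracker` names are hypothetical, positions are assumed to be in playfield pixels, velocity is expressed in pixels per frame, and wall bounces are ignored.

```cpp
#include <cstddef>
#include <deque>

// Ball position in playfield pixel coordinates (hypothetical type).
struct Point2f {
    float x;
    float y;
};

// Velocity in pixels per frame; `valid` is false until two samples exist.
struct Velocity {
    bool valid;
    float vx;
    float vy;
};

// Predicted crossing point on a rod line; `valid` is false when the ball
// is not moving toward that rod.
struct Intercept {
    bool valid;
    float y;
};

// Keeps the most recent positions and estimates velocity as the mean
// per-frame displacement across that window.
class BallTracker {
public:
    explicit BallTracker(std::size_t capacity)
        : capacity_(capacity < 2 ? 2 : capacity) {}

    void push(Point2f p) {
        history_.push_back(p);
        if (history_.size() > capacity_) history_.pop_front();
    }

    Velocity velocity() const {
        if (history_.size() < 2) return Velocity{false, 0.0f, 0.0f};
        const float n = static_cast<float>(history_.size() - 1);
        // The sum of consecutive deltas telescopes to (newest - oldest).
        return Velocity{true,
                        (history_.back().x - history_.front().x) / n,
                        (history_.back().y - history_.front().y) / n};
    }

    // Extends the straight-line path from the newest sample to x = rod_x.
    Intercept interceptY(float rod_x) const {
        const Velocity v = velocity();
        if (!v.valid || v.vx == 0.0f) return Intercept{false, 0.0f};
        const Point2f& p = history_.back();
        const float frames = (rod_x - p.x) / v.vx;
        if (frames < 0.0f) return Intercept{false, 0.0f};  // rod is behind the ball
        return Intercept{true, p.y + v.vy * frames};
    }

private:
    std::size_t capacity_;
    std::deque<Point2f> history_;
};
```

A caller would `push` one centroid per frame and query `interceptY` once per rod. Averaging over the window trades responsiveness for noise rejection, so the window length is a tuning parameter.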

State Estimation & Control

For rotational control, we trained four EfficientNet-B1 regression models on a custom dataset collected by automating the motors to sweep through all angular and lateral states. We optimized these models with post-training static quantization to INT8 and exported them to TensorRT engines. Inference runs on parallel CUDA streams to maintain 30 fps throughput. An Arduino Mega receives target step positions over serial. To correct stepper drift, the vision system periodically compares the y-position of the yellow tape markers against the position expected from the motor step count. If the error exceeds a pixel threshold, the Jetson sends a RESETPOS command to the Arduino to overwrite its internal step counter, effectively closing the loop without hardware encoders.
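The drift check might look something like the sketch below. Everything beyond the `RESETPOS` keyword is an assumption made for illustration: the linear step-to-pixel calibration, the `RodCalibration` type, the helper names, and the `RESETPOS <rod> <steps>` argument layout are all hypothetical.

```cpp
#include <cmath>
#include <string>

// Hypothetical linear calibration between motor steps and image pixels.
struct RodCalibration {
    float pixels_per_step;  // assumed constant scale along the rod axis
    float pixel_at_zero;    // marker y-position when the step counter is 0
};

// Marker y-position the camera should see for a given step count.
inline float expectedMarkerY(const RodCalibration& cal, long steps) {
    return cal.pixel_at_zero + cal.pixels_per_step * static_cast<float>(steps);
}

// Step count implied by the observed marker position (inverse mapping).
inline long stepsFromMarkerY(const RodCalibration& cal, float marker_y) {
    return std::lround((marker_y - cal.pixel_at_zero) / cal.pixels_per_step);
}

// Returns a correction command when drift exceeds the threshold, or an
// empty string when the step counter still agrees with the camera.
// The "RESETPOS <rod> <steps>" layout is an assumed wire format.
inline std::string driftCorrection(const RodCalibration& cal, int rod_index,
                                   long commanded_steps, float observed_y,
                                   float threshold_px) {
    const float error_px = observed_y - expectedMarkerY(cal, commanded_steps);
    if (std::fabs(error_px) <= threshold_px) return "";
    const long actual_steps = stepsFromMarkerY(cal, observed_y);
    return "RESETPOS " + std::to_string(rod_index) + " " +
           std::to_string(actual_steps) + "\n";
}
```

Deriving the new step count from the observed marker position, rather than resetting to zero, lets the counter be corrected without re-homing the rod.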